
In Part 1 of Deployment Strategies for Kubernetes Workloads, we covered the theoretical aspects of Blue Green and Canary deployment strategies. In this article, we’ll explore the practical steps required to implement Blue Green and Canary deployments.

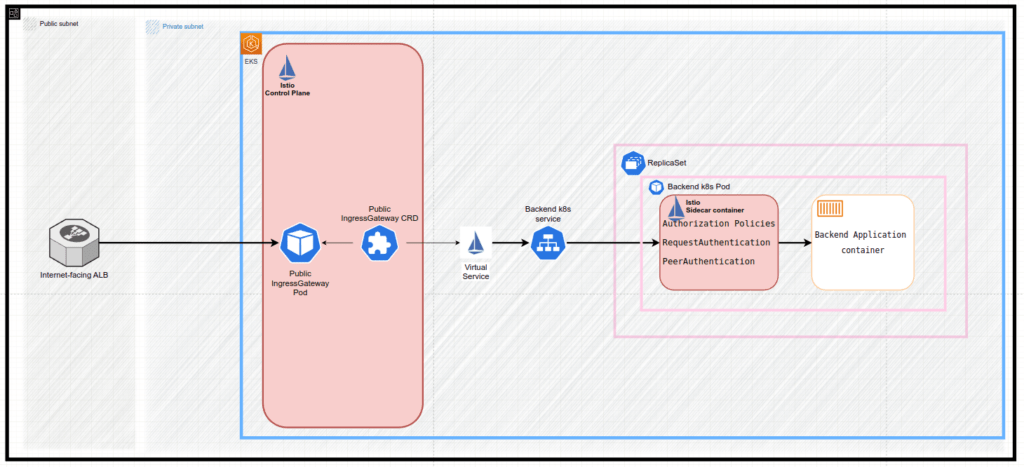

Consider the following application architecture:

- AWS EKS Kubernetes cluster with the application workload:

  - including Deployments, ReplicaSets, Pods, Services and other Kubernetes objects

- ArgoCD as the GitOps continuous delivery tool

- Istio Service Mesh as the traffic management layer:

  - including IngressGateway, VirtualService and other Istio objects

- Public AWS ALB as the entry point to the AWS EKS application workload

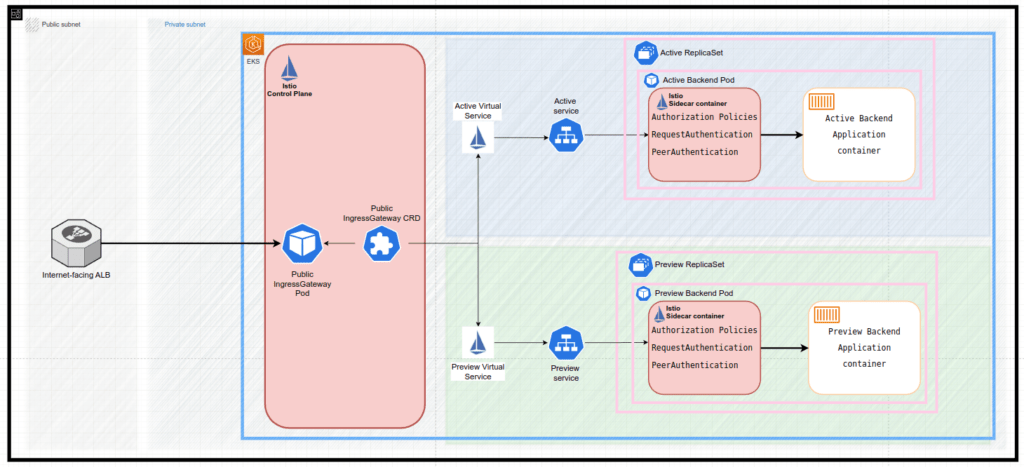

Blue Green Scenario

To build a basic understanding of how a Blue Green deployment works for workloads in AWS EKS, let’s consider the simplest use case scenario with the following requirements:

- we need to deploy a new app version by using Argo Rollouts only (even though it can work with standard Kubernetes Deployments in parallel)

- once the new app version is deployed:

  - the users’ traffic should still be sent to the old app version

  - the new app version should be available over HTTPS for UAT

  - the users’ traffic should be promoted to the new app version manually

- once manually approved, the users’ traffic is promoted to the new app version and the old app version is destroyed

To accomplish that, we need to deploy the following Kubernetes objects to AWS EKS:

- Rollout object

      apiVersion: argoproj.io/v1alpha1
      kind: Rollout
      metadata:
        name: app
      ...
        strategy:
          blueGreen:
            activeService: app
            previewService: "app-preview"
            autoPromotionEnabled: false

- Active and Preview Service objects

      apiVersion: v1
      kind: Service
      metadata:
        name: app
        ...
      spec:
        type: ClusterIP
        ports:
          - port: 80
            targetPort: 5000
            protocol: TCP
            name: http
        selector:
          app.kubernetes.io/name: app
      ---
      apiVersion: v1
      kind: Service
      metadata:
        name: app-preview
        ...
      spec:
        type: ClusterIP
        ports:
          - port: 80
            targetPort: 5000
            protocol: TCP
            name: http
        selector:
          app.kubernetes.io/name: app

- Active and Preview Istio virtual service objects

      apiVersion: networking.istio.io/v1beta1
      kind: VirtualService
      metadata:
        name: app
      spec:
        hosts:
          - app-api.test.com
        gateways:
          - istio-system/ingressgateway
        http:
          - name: backend-routes
            match:
              - uri:
                  prefix: /
            route:
              - destination:
                  host: app
                  port:
                    number: 80
      ---
      apiVersion: networking.istio.io/v1beta1
      kind: VirtualService
      metadata:
        name: app-preview
      spec:
        hosts:
          - app-api-preview.test.com
        gateways:
          - istio-system/ingressgateway
        http:
          - name: backend-routes
            match:
              - uri:
                  prefix: /
            route:
              - destination:
                  host: app-preview
                  port:
                    number: 80
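The Rollout manifest above elides the pod template and selector. As a rough illustration only, a fuller version could look like the sketch below. The replica count, image and namespace placement are assumptions made for this example and are not taken from the original setup; the label and container port are chosen to match the Service selectors and `targetPort` shown above.

```yaml
# Hypothetical, fuller blue-green Rollout. Values marked "assumed" are
# placeholders for illustration, not the article's actual configuration.
apiVersion: argoproj.io/v1alpha1
kind: Rollout
metadata:
  name: app
  namespace: app-service          # namespace used by the kubectl commands in this article
spec:
  replicas: 2                     # assumed
  selector:
    matchLabels:
      app.kubernetes.io/name: app
  template:
    metadata:
      labels:
        app.kubernetes.io/name: app   # must match the Services' selector
    spec:
      containers:
        - name: app
          image: registry.example.com/app:1.0.0   # assumed placeholder image
          ports:
            - containerPort: 5000     # the Services' targetPort
  strategy:
    blueGreen:
      activeService: app
      previewService: app-preview
      autoPromotionEnabled: false
```

Changing anything under `spec.template` (typically the image tag) is what triggers a new Blue Green update.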

The following describes the sequence of events that happen during a Blue Green update:

- The ReplicaSet with the active app (Active ReplicaSet object) is pointed to by both the Active Service object and the Preview Service object:

  - this is achieved by injecting a unique hash of the ReplicaSet into these services’ selectors, e.g. rollouts-pod-template-hash: 6cb484f69f

  - this allows the rollout to define an active and a preview stack and a process to migrate ReplicaSets from the preview to the active

        ## Active ReplicaSet object:
        apiVersion: apps/v1
        kind: ReplicaSet
        metadata:
          name: app-6cb484f69f
          ...
          labels:
            app.kubernetes.io/name: app
            rollouts-pod-template-hash: 6cb484f69f
        ...
        ## Active Service object
        apiVersion: v1
        kind: Service
        metadata:
          name: app
        ...
          selector:
            app.kubernetes.io/name: app
            rollouts-pod-template-hash: 6cb484f69f
        ...
        ## Preview Service object
        apiVersion: v1
        kind: Service
        metadata:
          name: app-preview
        ...
          selector:
            app.kubernetes.io/name: app
            rollouts-pod-template-hash: 6cb484f69f
        ...

- The Active Istio Virtual Service object and the Preview Istio Virtual Service object point to the Active Service object and the Preview Service object respectively:

      ## Active Istio Virtual Service object
      apiVersion: networking.istio.io/v1alpha3
      kind: VirtualService
      ...
        name: app
        ...
      spec:
        gateways:
          - istio-system/ingressgateway
        hosts:
          - app-api.test.com
        http:
          - match:
              - uri:
                  prefix: /
            name: backend-routes
            route:
              - destination:
                  host: app
                  port:
                    number: 80
      ## Preview Istio Virtual Service object
      apiVersion: networking.istio.io/v1alpha3
      kind: VirtualService
      ...
        name: app-preview
        ...
      spec:
        gateways:
          - istio-system/ingressgateway
        hosts:
          - app-api-preview.test.com
        http:
          - match:
              - uri:
                  prefix: /
            name: backend-routes
            route:
              - destination:
                  host: app-preview
                  port:
                    number: 80

- A new application version is built by the CI tool and delivered by ArgoCD, so the spec.template in the Rollout object changes.

- The Rollout Controller identifies the changes in the Rollout object and creates new objects in AWS EKS:

  - The new Preview ReplicaSet object is created

  - A new unique hash is assigned to the Preview ReplicaSet object label, e.g. rollouts-pod-template-hash: 8fbb965f6

        ## Preview ReplicaSet object:
        apiVersion: apps/v1
        kind: ReplicaSet
        metadata:
          name: app-8fbb965f6
          ...
          labels:
            app.kubernetes.io/name: app
            rollouts-pod-template-hash: 8fbb965f6
        ...

  - The Preview ReplicaSet object creates the relevant pods with the new app release.

- The Preview Service object is modified to point to the Preview ReplicaSet object.

      ## Preview Service object
      apiVersion: v1
      kind: Service
      metadata:
        name: app-preview
      ...
        selector:
          app.kubernetes.io/name: app
          rollouts-pod-template-hash: 8fbb965f6
      ...

- The Active Service object remains pointing to the Active ReplicaSet object

- The Preview Virtual Service object remains pointing to the Preview Service object

- The Active Virtual Service object remains pointing to the Active Service object

- Since autoPromotionEnabled is set to false in the Rollout configuration, the Blue/Green deployment pauses.

- Developers can reach the new app release and perform UAT by using the endpoint defined in the Preview Virtual Service object: app-api-preview.test.com

  - Additional inter-service communication inside the EKS cluster is available via the service’s internal DNS name: app-preview.app-service

- The rollout is either resumed manually by a user:

  - By issuing the command:

        kubectl argo rollouts promote app -n app-service

  - The rollout “promotes” the new app release by updating the Active Service object to point to the Preview ReplicaSet object. At this point, no services point to the old app release via the Active ReplicaSet object

        ## Active Service object
        apiVersion: v1
        kind: Service
        metadata:
          name: app
        ...
          selector:
            app.kubernetes.io/name: app
            rollouts-pod-template-hash: 8fbb965f6
        ...

  - After waiting scaleDownDelaySeconds (default 30 seconds), the Active ReplicaSet object is destroyed, which removes the old app release pods

  - All traffic is routed to the new app release through Active Istio Virtual Service object → Active Service object → Active (previously Preview) ReplicaSet object → new release app pods. It can also be reached through Preview Istio Virtual Service object → Preview Service object → the same (previously Preview) ReplicaSet object → new release app pods

- OR the rollout is undone manually by a user:

  - By issuing the command:

        kubectl argo rollouts undo app -n app-service

  - The rollout “undoes” the new app release by updating the Preview Service object to point to the Active ReplicaSet object. At this point, no services point to the new app release via the Preview ReplicaSet object

        ## Preview Service object
        apiVersion: v1
        kind: Service
        metadata:
          name: app-preview
        ...
          selector:
            app.kubernetes.io/name: app
            rollouts-pod-template-hash: 6cb484f69f
        ...

  - After waiting scaleDownDelaySeconds (default 30 seconds), the Preview ReplicaSet object is destroyed, which removes the new release pods

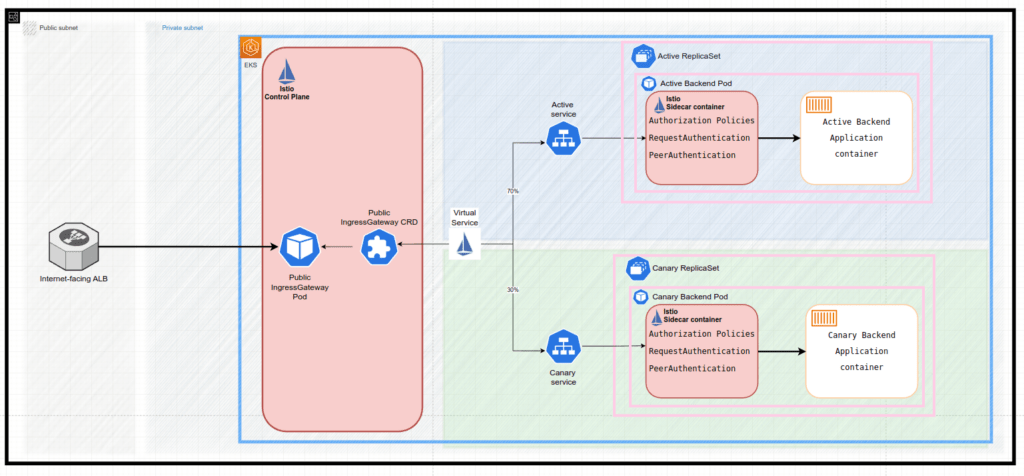
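The scale-down delay mentioned in the sequence above does not have to stay at its default. As a hedged sketch, the fragment below shows how the `blueGreen` strategy could set `scaleDownDelaySeconds` explicitly and limit the size of the preview stack with `previewReplicaCount`. Both values are assumptions chosen for illustration, not settings from the original setup.

```yaml
# Fragment of the Rollout spec only; the numeric values are assumed examples.
strategy:
  blueGreen:
    activeService: app
    previewService: app-preview
    autoPromotionEnabled: false
    # Keep the old ReplicaSet around for 2 minutes after promotion
    # instead of the default 30 seconds.
    scaleDownDelaySeconds: 120
    # Run a single preview pod for UAT until the rollout is promoted.
    previewReplicaCount: 1
```

A longer delay leaves more time for in-flight requests to drain from the old pods after the Active Service selector is switched.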

Canary Scenario

To build a basic understanding of how a Canary deployment works for workloads in AWS EKS, let’s consider the simplest use case scenario with the following requirements:

- we need to deploy a new app version by using Argo Rollouts only (even though it can work with standard Kubernetes Deployments in parallel)

- the users’ traffic should be gradually shifted to the new app version

- each iteration of shifting the users’ traffic should be performed manually

- the new app version should be available over HTTPS for UAT

To accomplish that, we use the Host-level Traffic Splitting Canary Deployment, which relies on splitting traffic between two Istio destinations (i.e. Kubernetes services): an Active Service and a Canary Service.

With this approach, the following Kubernetes objects need to be deployed to AWS EKS:

- Rollout object

      apiVersion: argoproj.io/v1alpha1
      kind: Rollout
      metadata:
        name: app
      ...
        strategy:
          canary:
            canaryService: app-canary
            stableService: app
            steps:
              - setWeight: 30
              - pause: {}
            trafficRouting:
              istio:
                virtualService:
                  name: app-canary
                  routes:
                    - backend-routes

- Active and Canary Service objects

      apiVersion: v1
      kind: Service
      metadata:
        name: app
        ...
      spec:
        type: ClusterIP
        ports:
          - port: 80
            targetPort: 5000
            protocol: TCP
            name: http
        selector:
          app.kubernetes.io/name: app
      ---
      apiVersion: v1
      kind: Service
      metadata:
        name: app-canary
        ...
      spec:
        type: ClusterIP
        ports:
          - port: 80
            targetPort: 5000
            protocol: TCP
            name: http
        selector:
          app.kubernetes.io/name: app

- Canary Istio virtual service objects

      apiVersion: networking.istio.io/v1beta1
      kind: VirtualService
      metadata:
        name: app-canary
      spec:
        hosts:
          - app-api.test.com
        gateways:
          - istio-system/ingressgateway
        http:
          - name: backend-routes
            match:
              - uri:
                  prefix: /
            route:
              - destination:
                  host: app
                  port:
                    number: 80
                weight: 100
              - destination:
                  host: app-canary
                  port:
                    number: 80
                weight: 0
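The Rollout above defines a single 30% step. Since the requirements call for a gradual shift with a manual gate on every iteration, the steps could be extended along the lines of the sketch below. The specific weights (10, 30, 60) are assumptions picked for illustration; only the object names come from the manifests above.

```yaml
# Fragment of the Rollout spec only; the weights are assumed examples.
strategy:
  canary:
    canaryService: app-canary
    stableService: app
    steps:
      - setWeight: 10
      - pause: {}      # no duration: waits for a manual promote
      - setWeight: 30
      - pause: {}
      - setWeight: 60
      - pause: {}
      # after the last step the rollout completes and the new version
      # receives all of the traffic
    trafficRouting:
      istio:
        virtualService:
          name: app-canary
          routes:
            - backend-routes
```

With this configuration each `kubectl argo rollouts promote app -n app-service` call moves the rollout past one pause to the next weight.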

The following describes the sequence of events that happen during a Canary update:

- The ReplicaSet with the active app (Active ReplicaSet object) is pointed to by both the Active Service object and the Canary Service object:

  - this is achieved by injecting a unique hash of the ReplicaSet into these services’ selectors, e.g. rollouts-pod-template-hash: 6cb484f69f

  - this allows the rollout to define an active and a canary stack and a process to migrate ReplicaSets from the canary to the active

        ## Active ReplicaSet object:
        apiVersion: apps/v1
        kind: ReplicaSet
        metadata:
          name: app-6cb484f69f
          ...
          labels:
            app.kubernetes.io/name: app
            rollouts-pod-template-hash: 6cb484f69f
        ...
        ## Active Service object
        apiVersion: v1
        kind: Service
        metadata:
          name: app
        ...
          selector:
            app.kubernetes.io/name: app
            rollouts-pod-template-hash: 6cb484f69f
        ...
        ## Canary Service object
        apiVersion: v1
        kind: Service
        metadata:
          name: app-canary
        ...
          selector:
            app.kubernetes.io/name: app
            rollouts-pod-template-hash: 6cb484f69f
        ...

- The Active Istio Virtual Service object points to the Active Service object and the Canary Service object with weights of 100 and 0 respectively:

      ## Active Istio Virtual Service object
      apiVersion: networking.istio.io/v1alpha3
      kind: VirtualService
      ...
        name: app-canary
        ...
      spec:
        gateways:
          - istio-system/ingressgateway
        hosts:
          - app-api.test.com
        http:
          - match:
              - uri:
                  prefix: /
            name: backend-routes
            route:
              - destination:
                  host: app
                  port:
                    number: 80
                weight: 100
              - destination:
                  host: app-canary
                  port:
                    number: 80
                weight: 0

- A new application version is built by the CI tool and delivered by ArgoCD, so the spec.template in the Rollout object changes.

- The Rollout Controller identifies the changes in the Rollout object and creates new objects in AWS EKS:

  - The new Canary ReplicaSet object is created

  - A new unique hash is assigned to the Canary ReplicaSet object label, e.g. rollouts-pod-template-hash: 8fbb965f6

        ## Canary ReplicaSet object:
        apiVersion: apps/v1
        kind: ReplicaSet
        metadata:
          name: app-8fbb965f6
          ...
          labels:
            app.kubernetes.io/name: app
            rollouts-pod-template-hash: 8fbb965f6
        ...

  - The Canary ReplicaSet object creates the relevant pods with the new app release.

- The Canary Service object is modified to point to the Canary ReplicaSet object.

      ## Canary Service object
      apiVersion: v1
      kind: Service
      metadata:
        name: app-canary
      ...
        selector:
          app.kubernetes.io/name: app
          rollouts-pod-template-hash: 8fbb965f6
      ...

- The Active Service object remains pointing to the Active ReplicaSet object

- Based on its canary step setWeight: 30, the rollout modifies the Active Istio Virtual Service object to route 70% of traffic to the Active Service object and 30% to the Canary Service object

      ## Active Istio Virtual Service object
      apiVersion: networking.istio.io/v1alpha3
      kind: VirtualService
      ...
        name: app-canary
        ...
      spec:
        gateways:
          - istio-system/ingressgateway
        hosts:
          - app-api.test.com
        http:
          - match:
              - uri:
                  prefix: /
            name: backend-routes
            route:
              - destination:
                  host: app
                  port:
                    number: 80
                weight: 70
              - destination:
                  host: app-canary
                  port:
                    number: 80
                weight: 30

- Since the next step in the Rollout configuration is pause: {} with no duration, the Canary deployment pauses until it is resumed manually.

- By employing any kind of application monitoring/logging solution, developers can evaluate how the user experience changes with the new app version.

  - Additional inter-service communication inside the EKS cluster is available via the service’s internal DNS name: app-canary.app-service

- The rollout is either resumed manually by a user:

      kubectl argo rollouts promote app -n app-service

  - The rollout “promotes” the new app release by updating the Active Service object to point to the Canary ReplicaSet object. At this point, no services point to the old app release via the Active ReplicaSet object

        ## Active Service object
        apiVersion: v1
        kind: Service
        metadata:
          name: app
        ...
          selector:
            app.kubernetes.io/name: app
            rollouts-pod-template-hash: 8fbb965f6
        ...

  - The rollout modifies the Active Istio Virtual Service object to route 100% of traffic back to the Active Service object and 0% to the Canary Service object (both point to the same ReplicaSet object again, which is now the active one)

  - After waiting scaleDownDelaySeconds (default 30 seconds), the old Active ReplicaSet object is destroyed, which removes the old app release pods

- OR the rollout is undone manually by a user:

      kubectl argo rollouts undo app -n app-service

  - The rollout “undoes” the new app release by updating the Canary Service object to point to the Active ReplicaSet object. At this point, no services point to the new app release via the Canary ReplicaSet object

        ## Canary Service object
        apiVersion: v1
        kind: Service
        metadata:
          name: app-canary
        ...
          selector:
            app.kubernetes.io/name: app
            rollouts-pod-template-hash: 6cb484f69f
        ...

  - The rollout modifies the Active Istio Virtual Service object to route 100% of traffic back to the Active Service object and 0% to the Canary Service object (both point to the same Active ReplicaSet object again)

  - After waiting scaleDownDelaySeconds (default 30 seconds), the Canary ReplicaSet object is destroyed, which removes the new release pods

Canary Deployment Challenges

It’s worth noting the challenges that arise when implementing a Canary deployment:

- DNS requirements

  - With host-level splitting, the VirtualService requires different host values to split among the two destinations. However, using two host values implies the use of different DNS names (one for the canary, the other for the stable). For north-south traffic, which reaches the Service through the Istio Gateway, having multiple DNS names to reach the canary vs. stable pods may not matter. However, for east-west or intra-cluster traffic, it forces microservice-to-microservice communication to choose to hit the stable or the canary internal DNS names respectively.

- Integrating with GitOps

  - Defining the initial canary and stable weights of 0 and 100 in the Istio Virtual Service object introduces a problem when GitOps is used for CD. Since a Rollout modifies these VirtualService weights as it progresses through its steps, the VirtualService becomes OutOfSync with the version in git.

  - Additionally, if the VirtualService in git were to be applied while the Rollout is in this state (splitting traffic between the services), the apply would revert the weights back to the values in git (i.e. 100 to stable, 0 to canary).

  - for the resolution, see “Maintain difference in cluster and git values for specific fields” (Issue #2913, argoproj/argo-cd)
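One commonly used mitigation for the GitOps problem is to tell ArgoCD to ignore the route section that the Rollout mutates. The sketch below is an assumption-laden illustration: the Application name and namespace are hypothetical, and the project, source and destination sections are omitted. It ignores differences in the first HTTP route of the `app-canary` VirtualService and asks ArgoCD to respect that setting during sync as well, so that a sync does not reset the weights mid-rollout.

```yaml
# Hypothetical ArgoCD Application fragment; metadata values are assumed and
# the project/source/destination sections are intentionally left out.
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: app                 # assumed
  namespace: argocd         # assumed
spec:
  ignoreDifferences:
    - group: networking.istio.io
      kind: VirtualService
      name: app-canary
      jsonPointers:
        - /spec/http/0      # the route whose weights the Rollout rewrites
  syncPolicy:
    syncOptions:
      - RespectIgnoreDifferences=true   # do not overwrite ignored fields on sync
```

The trade-off is that genuine changes made in git to that route are also ignored for diffing, so edits to the first HTTP route need extra care.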

Conclusion

In this article, we explored deployment strategies such as blue-green and canary, using the example of deploying applications in AWS EKS with Argo Rollouts.

The examples cover basic scenarios only and can serve as a starting point for developing more complex ones.

By: Pavel Luksha, Senior DevOps Engineer