Introduction

Whenever a modern application needs to expose data or business logic to the outside world, the hardest questions are rarely about the code itself. Instead, teams immediately run into the operational realities of traffic management: how do we secure dozens of endpoints, authenticate a variety of callers, protect ourselves against traffic spikes, roll out new versions without breaking anything, log every single request for auditing and, above all, ensure the whole thing scales from zero to hundreds of thousands of requests per second without melting down or costing a fortune?

Amazon API Gateway is AWS’s purpose‑built answer to these challenges. Sitting in front of your compute – whether that’s Lambda functions, containers in ECS or EKS, EC2 instances, on‑prem servers accessed through AWS Direct Connect or even another AWS managed service – API Gateway terminates HTTPS, routes requests, enforces authorization, meters usage, and surfaces observability signals, all while elastically scaling at the edge.

API Gateway comes in three distinct flavours so that you only pay for the capabilities you actually need:

- HTTP APIs – the streamlined, lower‑latency edition that is roughly 70 % cheaper than its bigger sibling. It is a good fit when all you need is JWT or Lambda authorizers, straightforward routing and basic CloudWatch metrics.

- REST APIs – the feature-complete classic. If your project requires fine-grained request/response transformation templates, usage plans, API keys, AWS WAF integration, caching, or X-Ray tracing, this is the tool of choice.

- WebSocket APIs – when a workload demands long‑lived, bi‑directional connections – for chat, live telemetry, collaborative apps or gaming – WebSocket APIs maintain persistent channels with built‑in $connect, $disconnect and custom route handling.

How an API Gateway Request Flows

Under the hood, every request that lands on an API Gateway endpoint follows a predictable, multi‑step journey.

First, an edge‑optimised CloudFront distribution – or a regional endpoint, if chosen – receives the encrypted call and forwards it to the route whose path and HTTP method pattern best match the request. Before any payload is allowed past this point, the gateway can execute an authorizer of your choosing (for instance a Cognito user pool authorizer or a custom Lambda authorizer) to establish the caller’s identity.

Once authorised, API Gateway invokes the configured integration. An integration can target a Lambda function, an HTTP or HTTPS endpoint, a private ALB/NLB reachable through a VPC Link or almost any AWS service action (for example, writing directly to DynamoDB or publishing to SNS). Both request and response payloads may be transformed on the fly via mapping templates, giving teams enormous flexibility without touching application code.

Finally, the response is optionally cached, logged and metered before it is returned to the client. Deployments are versioned into isolated stages (such as dev, qa, and prod), enabling safe blue‑green or canary roll‑outs that shift traffic gradually and allow instant rollback.
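The route-selection step at the start of this flow can be modelled in a few lines. The sketch below is a simplified illustration in Python, loosely following the priority order documented for HTTP APIs (a full match first, then a greedy `{proxy+}` match, then `$default`). The route table and the function name `select_route` are hypothetical, and this is not API Gateway's actual matcher.

```python
from typing import List, Optional

# Hypothetical route table; keys follow the "<METHOD> <path>" route-key shape.
ROUTES = [
    "GET /pets/{id}",
    "GET /pets/{proxy+}",
    "ANY /{proxy+}",
    "$default",
]


def _segments(path: str) -> List[str]:
    """Split a path into its non-empty segments."""
    return [s for s in path.split("/") if s]


def _segment_matches(route_seg: str, request_seg: str) -> bool:
    """A "{name}" segment matches anything; a literal must be equal."""
    is_variable = route_seg.startswith("{") and route_seg.endswith("}")
    return is_variable or route_seg == request_seg


def _match_kind(route_path: str, request_path: str) -> Optional[str]:
    """Return "full", "greedy" or None for one route path."""
    route, request = _segments(route_path), _segments(request_path)
    if route and route[-1].endswith("+}"):
        prefix = route[:-1]
        # In this sketch the greedy variable must consume at least one segment.
        if len(request) > len(prefix) and all(
            _segment_matches(r, p) for r, p in zip(prefix, request)
        ):
            return "greedy"
        return None
    if len(route) == len(request) and all(
        _segment_matches(r, p) for r, p in zip(route, request)
    ):
        return "full"
    return None


def select_route(method: str, path: str, routes: List[str]) -> Optional[str]:
    """Pick a route: full matches first, then greedy ones, then $default."""
    for wanted in ("full", "greedy"):
        for route in routes:
            if route == "$default":
                continue
            route_method, route_path = route.split(" ", 1)
            if route_method in (method, "ANY") and _match_kind(route_path, path) == wanted:
                return route
    return "$default" if "$default" in routes else None
```

With this table, `select_route("GET", "/pets/42", ROUTES)` picks the `GET /pets/{id}` route, while a deeper path such as `/pets/dog/1` falls through to the greedy route. Ties within one priority level are broken by list order here, which is a simplification of this sketch.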

Regardless of the flavour, every API hosted on API Gateway enjoys the same core advantages: one‑click integrations with more than a hundred AWS services or any public or VPC‑internal backend; multiple authorization options including IAM, Cognito user pools, third‑party JWTs, or bespoke Lambda authorizers; first‑class support for custom domains secured by ACM‑managed TLS certificates; exhaustive CloudWatch metrics and access logs; and zero‑downtime, blue‑green deployments via stage variables and canary releases. Because the service is serverless and fully managed, you never have to provision, patch, or pay for idle edge proxies.

In short, API Gateway provides development teams with an instant, production‑grade front door, allowing them to concentrate on building great APIs instead of wrestling with the underlying infrastructure.

Scenario Overview: Exposing Private VPC APIs to External Consumers

To illustrate these capabilities, let’s examine a reference scenario that surfaces repeatedly across companies of every size.

Imagine an internal microservice‑oriented application running entirely inside a private Amazon VPC. The workloads are deployed on Amazon EKS – though the same discussion would apply to ECS, self‑managed Kubernetes on EC2 or even a fleet of traditional EC2 instances. Inside the cluster, services communicate over internal REST APIs that resolve to private DNS names, yet a subset of endpoints is reachable by external customers on the public Internet.

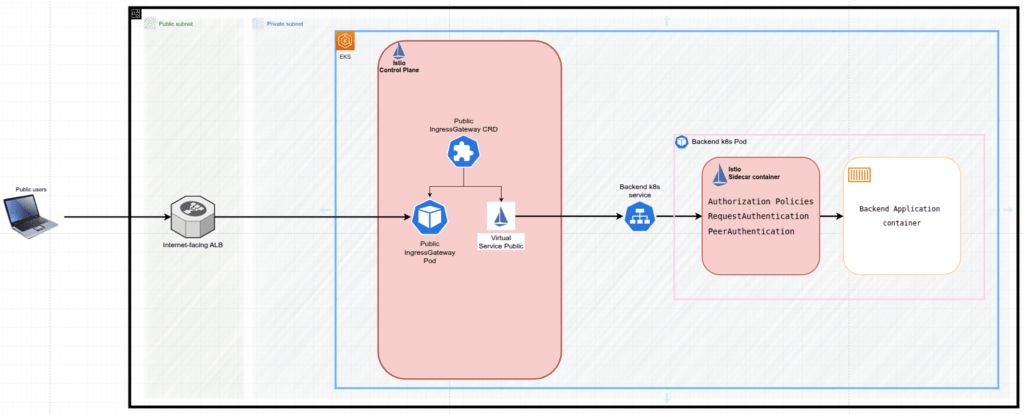

At the heart of the infrastructure sits the EKS cluster, whose worker nodes are placed in private subnets with route tables deliberately containing no path to an Internet Gateway. An Internet‑facing Application Load Balancer (ALB) anchored in the public subnets listens for public clients’ requests on port 443, terminates TLS and forwards traffic to a target group that, in turn, points at the public Istio Ingress Gateway pods running inside the cluster. Once inside the mesh, Istio enforces mutual TLS between microservices and applies fine‑grained authorization based on JWT claims. Additionally, the public Istio Virtual Service directs requests coming from the public Istio Ingress Gateway to the appropriate Kubernetes Service, which backs the target microservice pods – see Figure 1.

This design represents a secure infrastructure: internal traffic stays private and encrypted, while only explicitly allow-listed paths are exposed externally. Moreover, every external inbound request is authenticated via Amazon Cognito‑issued JWTs and evaluated against Istio policies long before it can reach any backend’s external endpoints.

Now let’s assume that a new business integration requires us to expose a number of internal REST endpoints that have historically been consumed exclusively by other workloads inside the EKS cluster. Until now, this traffic has never left the boundaries of the VPC.

Opening those routes to the outside world introduces several non‑negotiable requirements:

- Security

- Every request must carry a validated JWT (issued by Amazon Cognito) and remain encrypted end‑to‑end via TLS or mTLS.

- Traffic governance

- Burst protection, per‑tenant rate limits and usage quotas must shield downstream services from accidental or malicious overload.

- Observability

- Each call should be logged, traced, and metered so that operations teams can diagnose issues in real time.

- Request transformation

- We may need to add or strip headers/paths, inject trace IDs or adapt payloads without touching application code.

- Predictable DNS

- The public endpoints should resolve to a clean, certificate‑backed domain name such as api.example.com.

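The traffic governance requirement can be made concrete with a token bucket, the algorithm API Gateway's documentation names for request throttling. The following is a minimal sketch in Python, not the service's implementation; the class name, the per-tenant dictionary and the example limits are assumptions chosen for illustration.

```python
import time
from typing import Dict, Optional


class TokenBucket:
    """Token bucket: `rate` tokens refill per second, up to `burst` tokens."""

    def __init__(self, rate: float, burst: float) -> None:
        self.rate = rate          # steady-state requests per second
        self.burst = burst        # maximum number of requests in a burst
        self.tokens = burst       # the bucket starts full
        self.last: Optional[float] = None

    def allow(self, now: Optional[float] = None) -> bool:
        """Return True if one request may pass at time `now` (in seconds)."""
        now = time.monotonic() if now is None else now
        if self.last is not None:
            elapsed = max(0.0, now - self.last)
            self.tokens = min(self.burst, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


# Hypothetical per-tenant limits: each tenant gets its own bucket.
buckets: Dict[str, TokenBucket] = {}


def allow_request(tenant_id: str, now: Optional[float] = None) -> bool:
    """Throttle per tenant; unknown tenants get a default bucket (assumed limits)."""
    bucket = buckets.setdefault(tenant_id, TokenBucket(rate=2.0, burst=3.0))
    return bucket.allow(now)
```

A tenant can spend its whole burst at once and is then held to the refill rate, which is what shields downstream services from both accidental and malicious overload. One tenant exhausting its bucket does not affect another tenant's bucket.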

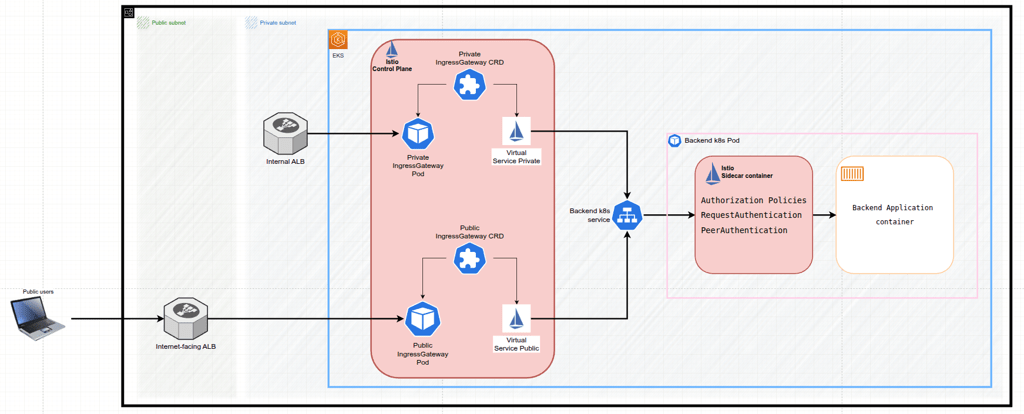

Because the application’s private APIs should be reachable only from within the VPC, the first building block is an internal Application Load Balancer (ALB) deployed to the private subnets. This internal ALB targets the dedicated private Istio Ingress Gateway pods, and Istio relays each request to the corresponding Kubernetes Service and its backing Pods – see Figure 2.

By introducing the internal ALB we preserve the zero‑trust posture: no public subnets expose the workloads directly. At the same time, the ALB gives us a stable, scalable endpoint on which to anchor the next layer: an Amazon API Gateway v2 HTTP API, integrated through an API Gateway VPC Link, together with a Cognito user pool that holds the private customers’ user accounts. API Gateway terminates TLS for the custom domain, authenticates callers by validating the JWT on every request to the application’s private endpoints against the Cognito user pool that issued it, enforces throttling, emits structured logs and handles any required header manipulation, all before handing the request off to the internal ALB. To tighten the flow further, we can attach a dedicated security group to the VPC Link network interfaces and configure the internal ALB’s security group to accept inbound traffic on port 443 only from that VPC Link security group.
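To give a feel for the JWT check described above, here is a minimal sketch in Python of the claim checks only: issuer, audience (or `client_id`, which Cognito access tokens carry instead of `aud`) and expiry. It deliberately omits signature verification against the issuer's public keys, which a real authorizer performs, so it must not be used as an authentication mechanism. The issuer URL, the client ID and the helper `make_unsigned_token` are hypothetical and exist only to make the example runnable.

```python
import base64
import json
import time
from typing import Iterable, Optional

# Hypothetical values; a Cognito issuer has the form
# https://cognito-idp.<region>.amazonaws.com/<user_pool_id>
ISSUER = "https://cognito-idp.eu-west-1.amazonaws.com/eu-west-1_EXAMPLE"
ALLOWED_AUDIENCES = ["example-app-client-id"]


def _b64url(data: bytes) -> str:
    """Base64url-encode without padding, as used in JWT segments."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def make_unsigned_token(claims: dict) -> str:
    """Build a token with a placeholder signature (for this demo only)."""
    header = _b64url(json.dumps({"alg": "none", "typ": "JWT"}).encode())
    payload = _b64url(json.dumps(claims).encode())
    return f"{header}.{payload}.placeholder-signature"


def decode_claims(token: str) -> dict:
    """Decode the payload segment WITHOUT verifying the signature."""
    _header, payload, _signature = token.split(".")
    padded = payload + "=" * (-len(payload) % 4)
    return json.loads(base64.urlsafe_b64decode(padded))


def claims_ok(claims: dict, issuer: str, audiences: Iterable[str],
              now: Optional[float] = None) -> bool:
    """Check issuer, audience/client_id and expiry of already-decoded claims."""
    now = time.time() if now is None else now
    if claims.get("iss") != issuer:
        return False
    aud = claims.get("aud", claims.get("client_id"))
    aud_values = aud if isinstance(aud, list) else [aud]
    allowed = set(audiences)
    if not any(value in allowed for value in aud_values):
        return False
    exp = claims.get("exp")
    return isinstance(exp, (int, float)) and exp > now
```

A token whose issuer does not match the configured user pool, whose audience is unknown or whose `exp` lies in the past is rejected before the request ever reaches the VPC Link.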

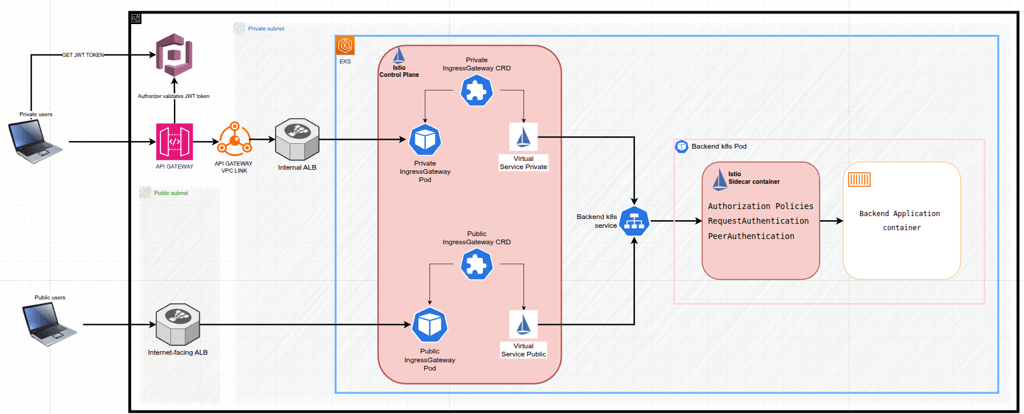

In short, the resulting flow is (see Figure 3):

- External client → authenticates against the Cognito API and obtains a JWT

- External client → sends an HTTPS request, carrying the JWT, to api.example.com (the API Gateway custom domain)

- API Gateway → authenticates, throttles and logs the request and rewrites headers/paths (e.g. https://api.example.com/api/<endpoint_path> is rewritten to https://<BACKEND_APPLICATION_DNS_NAME>/private/<endpoint_path>)

- VPC Link → private network tunnel into the VPC, specifically to the internal ALB

- Internal ALB → load balances across the private Istio Ingress Gateway pods (Amazon EKS)

- Private Istio Ingress Gateway → passes the request to the private Istio Virtual Service (mTLS + authorization checks)

- Private Istio Virtual Service → routes to the application’s Kubernetes Service and its backing microservice pods
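The path rewrite in the API Gateway step of this flow amounts to swapping a public prefix for a private one. The sketch below expresses that rule as a plain Python function so it can be reasoned about and tested in isolation. The function name and the default prefixes `/api` and `/private` are taken from the example URLs above or invented for illustration; in the gateway itself the rewrite would be configured on the integration rather than written as code.

```python
def rewrite_path(public_path: str,
                 public_prefix: str = "/api",
                 private_prefix: str = "/private") -> str:
    """Map /api/<endpoint_path> to /private/<endpoint_path>.

    Raises ValueError for paths outside the public prefix, so that nothing
    outside the explicitly exposed namespace is forwarded.
    """
    if public_path == public_prefix:
        return private_prefix
    if not public_path.startswith(public_prefix + "/"):
        raise ValueError(f"path {public_path!r} is not under {public_prefix!r}")
    return private_prefix + public_path[len(public_prefix):]
```

Requiring the prefix to be followed by `/` avoids accidentally matching look-alike paths such as `/apix/...`, which keeps the exposed surface limited to the intended endpoints.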

Conclusion

By inserting Amazon API Gateway v2 as the outward‑facing front door, connected to an internal ALB through a private VPC Link, we create a clean security and operational dividing line between the unpredictable Internet and the restricted, VPC‑bound microservices. The pattern addresses the requirements set out above: standards‑based authentication, granular rate limiting, zero‑trust networking, rich observability and painless blue‑green deployments, all without demanding any changes to the business logic itself.

From a cost and agility perspective, the architecture can scale elastically from a trickle of traffic in the early hours of a Sunday to a surge of hundreds of thousands of requests per second during a product launch, all while charging only for what is actually consumed. Meanwhile, developers and operators inherit a simplified operational model: they troubleshoot with CloudWatch logs and traces, tune throttling in the API Gateway console and iterate safely behind canary stages.

In short, exposing private EKS endpoints through API Gateway is not merely a workaround. It is a robust pattern that can accelerate partner integrations and let teams stay focused on delivering business value rather than building edge infrastructure.

Links

Understanding VPC links in Amazon API Gateway private integrations | Amazon Web Services

By Pavel Luksha, Senior DevOps Engineer, Klika Tech, Inc.