
OVERVIEW

AWS RDS Proxy is a fully managed, highly available, and easy-to-use database proxy offered by AWS RDS. It is designed to improve application performance, scalability, security, and resilience. This article explores the key features, benefits, and practical implementation of AWS RDS Proxy.

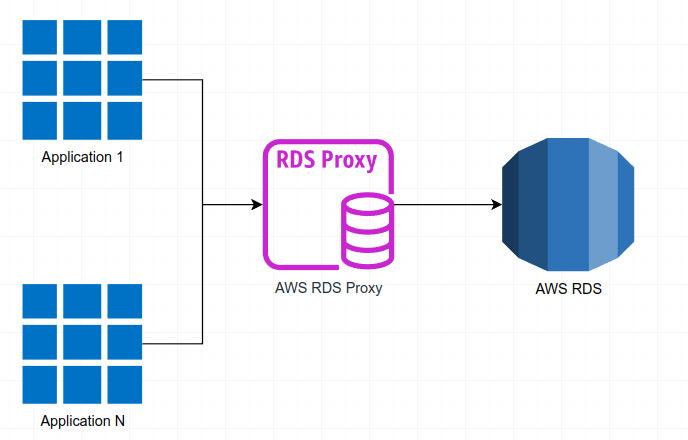

Figure 1 shows a high-level overview of AWS RDS Proxy.

RDS Proxy is managed infrastructure that sits between an application and RDS. It performs connection pooling and provides the other RDS Proxy features discussed in detail later in this article.

Each RDS Proxy handles connections to a single RDS DB instance or cluster. For a Multi-AZ DB instance or cluster, the proxy automatically detects the current writer instance and routes traffic to it.

Let's take a look at the key features of RDS Proxy.

Improved scalability through connection pooling.

RDS Proxy maintains a pool of established connections to RDS database instances, which significantly reduces the overhead on database compute and memory resources. This is particularly important when new connections are rapidly created by applications. By pooling and sharing connections, RDS Proxy can efficiently manage unpredictable surges in database traffic. Without this feature, these surges might lead to issues such as connection oversubscription or overwhelming the database with new connection requests.

Additionally, RDS Proxy shares infrequently used database connections, allowing fewer direct connections to the RDS database. This enables the database to handle a large number of application connections more efficiently, helping your application scale without sacrificing performance.

Enhanced availability by reducing failover times and maintaining connections during failovers.

RDS Proxy minimizes application disruption by reducing failover times by up to 66% and preserving connections during outages. When a failover occurs, RDS Proxy automatically connects to the new database instance while keeping idle application connections open. Most sessions therefore survive the failover, and downtime is significantly reduced.

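RDS Proxy can also be used with Multi-AZ DB cluster deployments, which consist of a writer and two readable standby instances. The Multi-AZ DB cluster deployment itself provides the following characteristics:

- failovers that typically complete in under 35 seconds
- up to 2x improved write latency
- additional read capacity

When RDS Proxy is added, downtime for minor version upgrades is typically reduced to less than 1 second.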

Improved security by integrating AWS IAM authentication and securely managing credentials in AWS Secrets Manager.

RDS Proxy enhances security by offering the option to enforce IAM-based authentication for databases, removing the need for hard-coded credentials in applications. Instead of providing a username and password, IAM roles associated with services like Lambda or EC2 instances can authenticate with RDS Proxy. The database credentials used by RDS Proxy in turn are securely stored in AWS Secrets Manager, streamlining and centralizing credential management.

Alternatively, you can connect to RDS Proxy using traditional database authentication methods. The username and password provided when establishing connections to RDS Proxy are verified against the credentials stored in AWS Secrets Manager, which are then used to connect to the underlying RDS database.
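The following minimal Python sketch shows the IAM option in code. It assumes the application uses boto3. `generate_db_auth_token` is a real boto3 RDS client method. The function name `iam_connection_params` and the keys of the returned dictionary are hypothetical and would need to be mapped onto whatever database driver the application uses. The sketch also assumes two things:

- IAM authentication is enabled on the proxy.
- The caller's IAM role is allowed to connect (`rds-db:connect`).

```python
from typing import Any, Dict


def iam_connection_params(rds_client: Any, proxy_endpoint: str, port: int,
                          db_user: str, region: str) -> Dict[str, Any]:
    """Build connection parameters that authenticate to RDS Proxy with IAM.

    `rds_client` is expected to be a boto3 RDS client (boto3.client("rds")).
    The dictionary keys below are hypothetical; adapt them to your driver.
    """
    token = rds_client.generate_db_auth_token(
        DBHostname=proxy_endpoint,  # the proxy endpoint, not the DB instance endpoint
        Port=port,
        DBUsername=db_user,
        Region=region,
    )
    return {
        "host": proxy_endpoint,
        "port": port,
        "user": db_user,
        "password": token,  # the IAM auth token takes the place of a stored password
        "ssl": True,  # IAM authentication is used over TLS
    }
```

The token is short-lived (15 minutes), so it is generated per connection rather than stored anywhere.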

WHERE DO WE NEED AWS RDS PROXY?

The table below outlines the key factors to consider when implementing RDS Proxy.

| Factor | Explanation | How RDS Proxy helps |
|---|---|---|
| Any RDS instance that encounters a “too many connections” error | The application is opening more connections than the instance's `max_connections` setting allows | RDS Proxy lets applications open many client connections while it manages a smaller number of long-lived connections to the RDS instance itself |
| DB instances that use smaller instance classes, such as T2 or T3 | When an application opens many connections to a small RDS instance, the instance may run out of memory and spend significant CPU on connection handling | RDS Proxy helps avoid out-of-memory conditions and reduces the CPU overhead of establishing connections |
| AWS Lambda functions | These functions make frequent, short-lived database connections, putting pressure on the RDS instance | RDS Proxy pools these short connections. It also supports IAM authentication for Lambda functions, so database credentials do not have to be managed in application code |
| Applications that open and close large numbers of database connections and have no built-in connection pooling | Connection counts can surge, especially when connections are created at a high rate | RDS Proxy lets applications open many client connections while it manages a smaller number of long-lived connections to the RDS instance |
| Applications that keep a large number of connections open for long periods | This can exhaust the connections available on the RDS instance | With RDS Proxy, an application can keep more connections open than it could when connecting directly to the RDS instance |
| Applications that require high availability (HA) or fault tolerance (FT) | The application must survive RDS downtime | RDS Proxy bypasses Domain Name System (DNS) caches to reduce failover times by up to 66% for Amazon RDS Multi-AZ DB instances. It also automatically routes traffic to the new database instance while preserving application connections, making failovers more transparent |

RDS PROXY CONCEPTS

CONNECTIONS

Because AWS RDS Proxy acts as an intermediary between an application and a database, applications connect to the proxy rather than to the database. Connections from an application to RDS Proxy are called client connections, and connections from the proxy to the database are called database connections. Client connections terminate at the proxy, while database connections are managed within RDS Proxy.

These are the main concepts:

Connection Pooling – Each proxy handles connection pooling for the writer instance of its associated RDS database. This technique optimizes database performance by reducing the overhead tied to opening and closing connections, as well as managing many connections simultaneously. This overhead includes memory usage for handling each new connection and CPU overhead for tasks like closing old connections and establishing new ones (e.g., TLS/SSL handshaking, authentication, capability negotiation, etc.). With connection pooling, your application logic is simplified, as there is no need to implement code specifically for minimizing the number of open connections at a given time.

Connection pool – the set of database connections that RDS Proxy keeps open and ready to be used by applications.

Endpoint – an RDS Proxy endpoint is the connection point that the application uses to interact with the database.

Target group – the group that represents the RDS DB instance or instances that the RDS Proxy can connect to. The target group contains the underlying databases that handle the application’s requests.
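The following toy model, written in Python for illustration, shows why a pool helps. Client requests borrow an already-open database connection, and a new one is opened only when none is idle. This is a simplified sketch of the general technique under our own assumptions, not RDS Proxy's implementation. The class and method names are hypothetical. The real proxy sizes its pool through target group settings such as `MaxConnectionsPercent`.

```python
import threading
from contextlib import contextmanager


class ToyConnectionPool:
    """Hypothetical model of a proxy-side pool: many clients, few DB connections."""

    def __init__(self, max_db_connections: int):
        # Caps how many DB connections can be in use at the same time.
        self._sem = threading.BoundedSemaphore(max_db_connections)
        self._idle = []  # DB connections that are open but currently unused
        self._lock = threading.Lock()
        self.opened = 0  # how many DB connections were ever opened

    def _open_db_connection(self) -> str:
        # Stand-in for the costly connect + TLS handshake + authentication.
        self.opened += 1
        return f"db-conn-{self.opened}"

    @contextmanager
    def borrow(self):
        self._sem.acquire()  # waits (queues) while every DB connection is busy
        with self._lock:
            conn = self._idle.pop() if self._idle else self._open_db_connection()
        try:
            yield conn
        finally:
            with self._lock:
                self._idle.append(conn)  # keep it open for the next client
            self._sem.release()
```

For example, pushing 100 sequential requests through `ToyConnectionPool(max_db_connections=2)` opens only one database connection. Each request returns its connection to the idle list before the next one arrives.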

SECURITY

RDS Proxy can act as an additional layer of security between client applications and the underlying database. For instance, we can connect to the proxy using TLS 1.3, even if the underlying database instance only supports an older version of TLS. Additionally, RDS Proxy supports connections through IAM roles, even if the proxy itself connects to the database using traditional username and password authentication. This approach allows us to enforce stronger authentication requirements for database applications without requiring a costly migration effort for the DB instances.
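On the client side, only the application-to-proxy leg is under the application's control. The following sketch uses Python's standard `ssl` module and assumes a database driver that accepts an `ssl.SSLContext`. The helper name and the optional CA bundle path are hypothetical. If the proxy's certificate is trusted by the system store, no bundle is needed.

```python
import ssl
from typing import Optional


def proxy_tls_context(ca_bundle: Optional[str] = None) -> ssl.SSLContext:
    """TLS settings for the client -> RDS Proxy connection only.

    The proxy terminates this TLS session, so the client can require
    TLS 1.3 independently of what the proxy -> database leg uses.
    """
    # Verifies the server certificate and hostname by default.
    ctx = ssl.create_default_context(cafile=ca_bundle)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3  # refuse anything older
    return ctx
```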

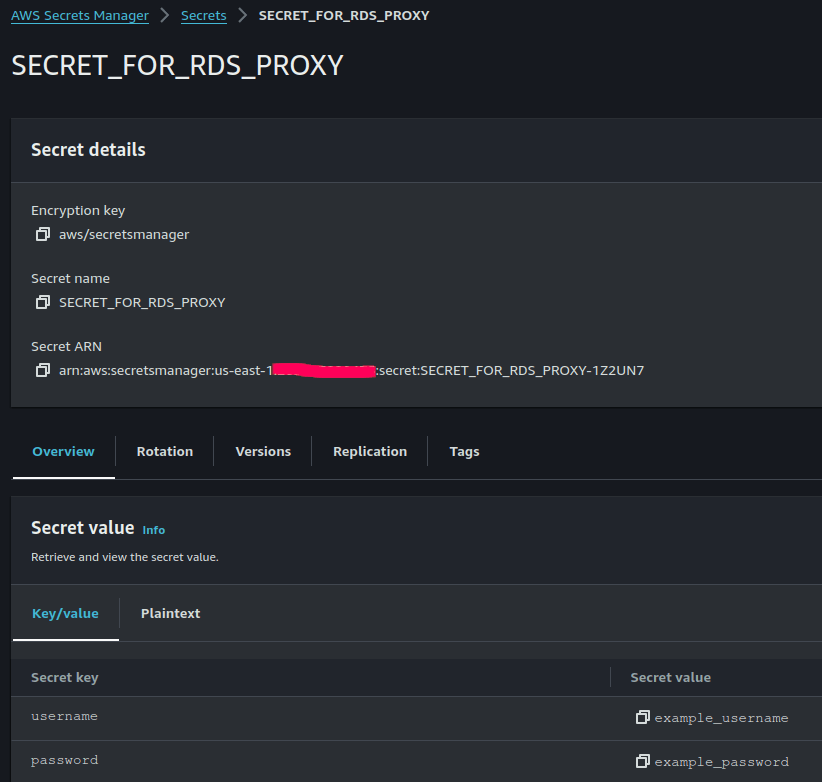

RDS Proxy uses AWS Secrets Manager to store database credentials. For each database user that the proxy will use to connect to the RDS DB instance, you must create a separate Secrets Manager secret containing that user's username and password.

To establish a connection through the proxy as a specific database user, the password stored in Secrets Manager must match the database password for that user. The credentials provided by clients to RDS Proxy are matched with the stored secrets in Secrets Manager, and then used to establish connections to the underlying database (see AWS RDS Proxy FAQs). This means that clients authenticate themselves with RDS Proxy, which relies on Secrets Manager to maintain a secure database of credentials.
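Each secret holds one database user's credentials as a JSON document with `username` and `password` keys. The following minimal boto3 sketch creates such a secret. `create_secret` with `Name` and `SecretString` is the real Secrets Manager API. The helper name and all argument values are hypothetical.

```python
import json
from typing import Any


def store_proxy_secret(sm_client: Any, secret_name: str,
                       db_user: str, db_password: str) -> str:
    """Create one Secrets Manager secret for one database user.

    `sm_client` is expected to be boto3.client("secretsmanager"). The
    password must match the one set on the database for `db_user`,
    otherwise the proxy cannot log in as that user.
    """
    response = sm_client.create_secret(
        Name=secret_name,
        SecretString=json.dumps({"username": db_user, "password": db_password}),
    )
    return response["ARN"]  # this ARN is what the proxy's auth settings reference
```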

FAILOVER

Failover is a high-availability feature that automatically replaces a database instance with a standby one when the original instance becomes unavailable. This can occur due to a problem with the database instance or as part of scheduled maintenance, such as a database upgrade. Failover is specifically applicable to RDS DB instances configured for Multi-AZ.

Using RDS Proxy improves an application's resilience during database failovers. When the primary DB instance goes down, RDS Proxy connects to the standby instance without dropping idle application connections, which speeds up and simplifies the failover process. As a result, a failover is less disruptive to the application than a typical reboot or database problem.

For applications that manage their own connection pool, RDS Proxy ensures most connections remain intact during failovers or disruptions. Only connections actively engaged in a transaction or executing an SQL statement are terminated. RDS Proxy immediately accepts new connections during this time, and when the database writer is unavailable, it queues incoming requests.
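Connections that were inside a transaction are still terminated during a failover, so the application should be ready to re-run the whole transaction. The following generic Python retry wrapper with exponential backoff illustrates this; it is not an RDS Proxy API. The exception types to treat as retryable depend on the driver and are an assumption here. Retrying is also only safe if the transaction is idempotent, or if the ambiguity of a connection dropped during `COMMIT` is acceptable.

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def run_with_retry(txn: Callable[[], T],
                   retryable: Tuple[Type[BaseException], ...],
                   attempts: int = 5,
                   base_delay: float = 0.5) -> T:
    """Re-run a complete transaction if its connection was cut off.

    `txn` must open, execute and commit the whole transaction itself, so
    each retry starts from a clean state on a fresh connection.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return txn()
        except retryable:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            time.sleep(base_delay * (2 ** attempt))  # exponential backoff
    raise AssertionError("unreachable")
```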

For applications that don’t manage their own connection pools, RDS Proxy provides faster connection handling and supports more concurrent connections. It reduces the overhead on the database by reusing connections in its connection pool, significantly reducing the cost associated with frequent reconnects. This feature is particularly advantageous for TLS connections, where the overhead involved in establishing a secure connection can be costly.

TRANSACTIONS


All the statements within a single transaction always use the same underlying database connection. The connection becomes available for reuse by a different session only when the transaction ends. Using the transaction as the unit of granularity has the following consequences:

- When the autocommit setting is enabled (RDS for MySQL), connections can be reused after each individual statement.

- On the other hand, if autocommit is disabled, the first statement in a session starts a new transaction. For instance, when you issue a sequence of SELECT, INSERT, UPDATE, and other data manipulation language (DML) statements, the connection isn't reused until you issue a COMMIT or ROLLBACK, or otherwise end the transaction.

- Issuing a data definition language (DDL) statement ends the transaction after its completion.

RDS Proxy identifies when a transaction ends using the network protocol of the database client, rather than relying on SQL keywords like COMMIT or ROLLBACK in the statement text.
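The following toy Python model, with hypothetical names and not RDS Proxy's code, illustrates transaction-level multiplexing. It models the status the database reports back as an explicit `in_txn_after` flag, standing in for signals such as MySQL's in-transaction status flag or PostgreSQL's ReadyForQuery status. A session keeps its database connection only while that flag says a transaction is open. The SQL text is never inspected.

```python
from typing import Dict, List


class ToyTransactionRouter:
    """Hypothetical model of transaction-level connection multiplexing."""

    def __init__(self, pool_size: int):
        self.free: List[int] = list(range(pool_size))  # idle DB connection ids
        self.held: Dict[str, int] = {}  # session -> connection inside a transaction

    def run(self, session: str, sql: str, in_txn_after: bool) -> int:
        """Route one statement and return the DB connection id it used.

        `in_txn_after` stands in for the transaction status the server
        reports after the statement; `sql` is deliberately ignored.
        """
        conn = self.held.get(session)
        if conn is None:
            if not self.free:
                # The real proxy would queue the request; the toy just fails.
                raise RuntimeError("no free DB connection")
            conn = self.free.pop()
        if in_txn_after:
            self.held[session] = conn  # transaction still open: stay pinned to it
        else:
            self.held.pop(session, None)
            self.free.append(conn)  # transaction over: any session may reuse it
        return conn
```

With autocommit on, every statement reports "idle". Two sessions running one statement each can therefore share the same connection. With autocommit off, a session holds its connection from its first statement until the statement that ends the transaction.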

In certain situations, RDS Proxy may detect a database request that makes it impractical to move the session to a different connection. When this happens, multiplexing is disabled for that connection for the rest of the session. This mechanism is called pinning. RDS Proxy applies the same rule when it cannot reliably determine whether multiplexing is feasible for a session. For details on how to detect and minimize pinning, see Avoiding pinning an RDS Proxy in the Amazon RDS User Guide.

USE CASE SCENARIO

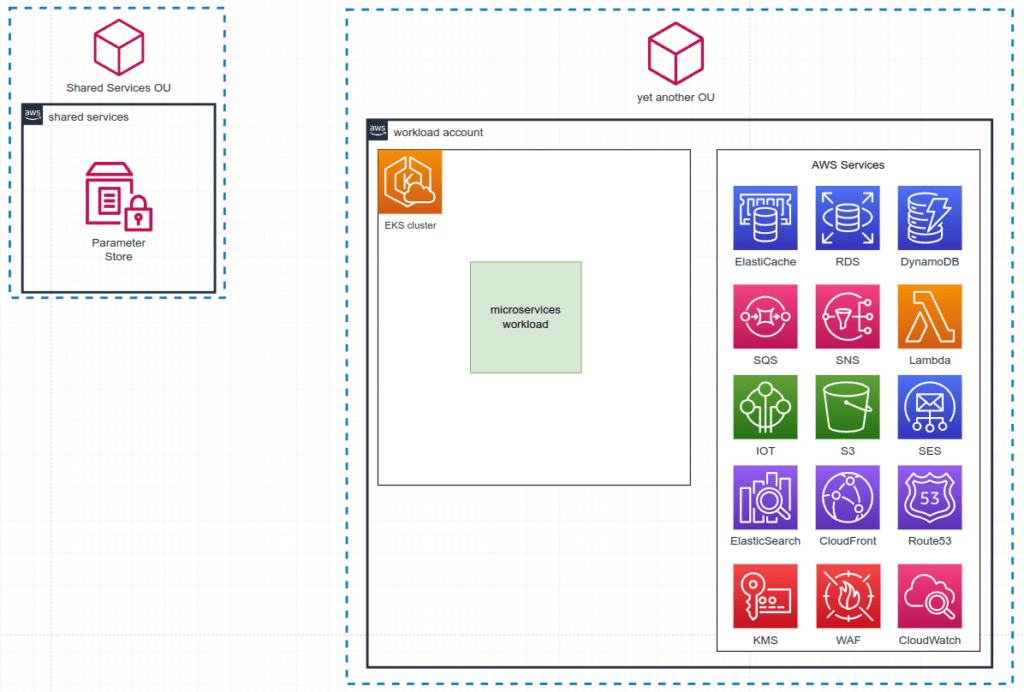

Let’s explore a multi-account infrastructure in AWS that leverages a variety of AWS services. The accounts are organized into several Organizational Units (OUs), each containing AWS accounts that extensively use services like EKS clusters for running microservices applications. These microservices require relational databases, and in our case, we use AWS RDS for storing data. The RDS authentication is user/password-based, meaning that each time a new database is created, corresponding users and passwords are generated. To centralize the storage of credentials and other essential variables for the microservices across all AWS accounts, we utilize AWS Systems Manager Parameter Store, which resides in the “Shared Services” AWS account.

The diagram below (Figure 2) illustrates the structure of this AWS multi-account setup.

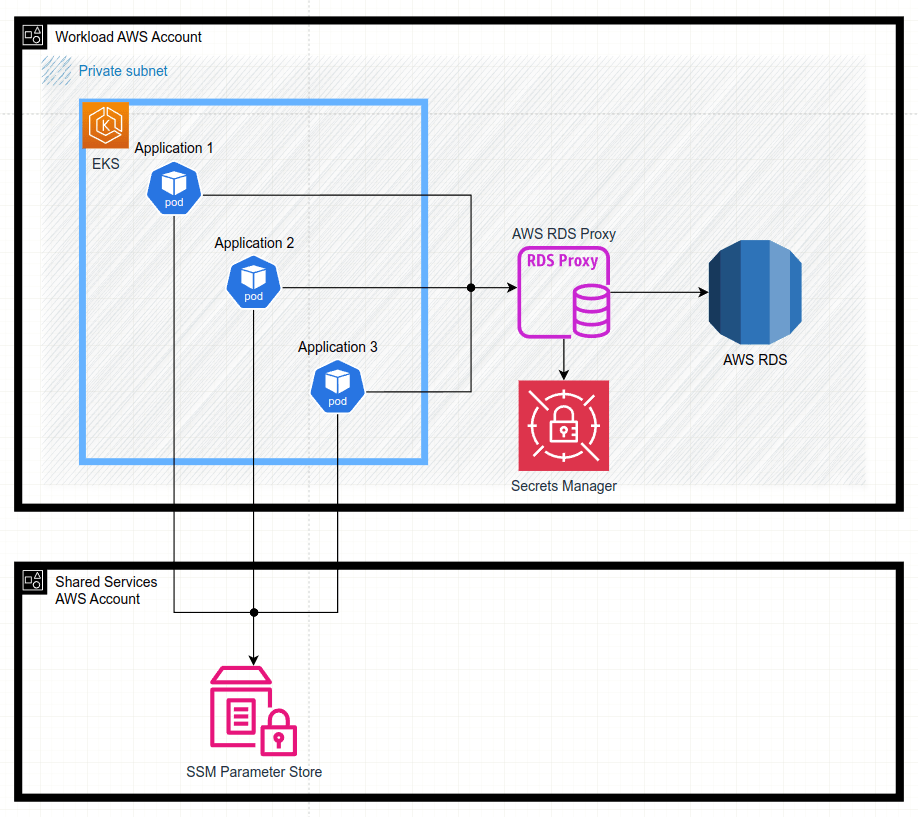

Now, let’s assume we encounter one of the scenarios discussed in the Where do we need AWS RDS Proxy? section. Consequently, we plan to integrate RDS Proxy to serve as an intermediary between the microservices running in the EKS cluster and AWS RDS.

Let’s examine the steps required for its implementation.

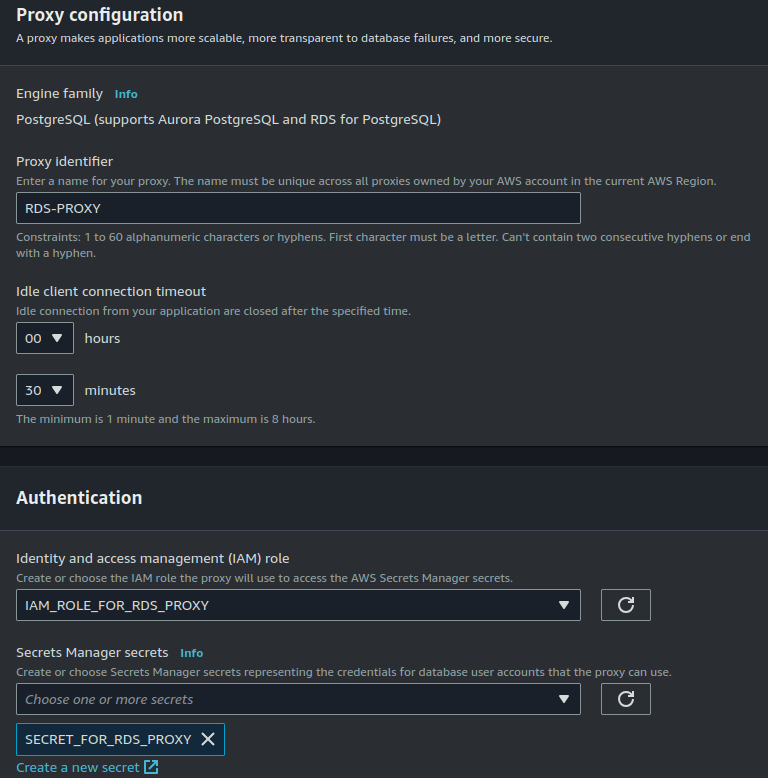

Since RDS authentication relies on user/password credentials, RDS Proxy must be configured with AWS Secrets Manager, as explained in the Security section. Specifically, we need to create one secret in Secrets Manager for each application's database user, holding that user's credentials. We also need to create an IAM role with policies that allow reading these secrets, and assign that role to RDS Proxy.

So, when a new microservice is introduced, the workflow steps are as follows:

- Create a new database in RDS along with the relevant user/password credentials.

- Add the corresponding parameters to AWS Systems Manager Parameter Store (along with any other required variables) in the Shared Services AWS account.

- Create an IAM role with the necessary policies for RDS Proxy, granting permission to list and get secrets in AWS Secrets Manager.

IAM role policy for the "IAM_ROLE_FOR_RDS_PROXY" IAM role:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": "kms:Decrypt",
      "Condition": {
        "StringEquals": {
          "kms:ViaService": "secretsmanager.us-east-1.amazonaws.com"
        }
      },
      "Effect": "Allow",
      "Resource": "arn:aws:kms:*:*:key/*",
      "Sid": "DecryptSecrets"
    },
    {
      "Action": [
        "secretsmanager:ListSecrets",
        "secretsmanager:GetRandomPassword"
      ],
      "Effect": "Allow",
      "Resource": "*",
      "Sid": "ListSecrets"
    },
    {
      "Action": [
        "secretsmanager:ListSecretVersionIds",
        "secretsmanager:GetSecretValue",
        "secretsmanager:GetResourcePolicy",
        "secretsmanager:DescribeSecret"
      ],
      "Effect": "Allow",
      "Resource": "arn:aws:secretsmanager:us-east-1:<account_id>:secret:SECRET_FOR_RDS_PROXY-??????",
      "Sid": "GetSecrets"
    }
  ]
}
```

Secrets Manager appends a hyphen and six random characters to every secret ARN, so the `-??????` wildcard is needed for the `GetSecrets` statement to match the secret.

Trusted entities:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "RDSAssume",
      "Effect": "Allow",
      "Principal": {
        "Service": "rds.amazonaws.com"
      },
      "Action": "sts:AssumeRole"
    }
  ]
}
```

- Store the appropriate secret in AWS Secrets Manager.

- Configure the secret's resource policy to allow access from the RDS Proxy IAM role.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "RDSProxyReadWrite",
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::<account_id>:role/IAM_ROLE_FOR_RDS_PROXY"
      },
      "Action": [
        "secretsmanager:GetSecretValue",
        "secretsmanager:DescribeSecret"
      ],
      "Resource": "arn:aws:secretsmanager:<aws_region>:<account_id>:secret:SECRET_FOR_RDS_PROXY-??????"
    }
  ]
}
```

- Set up the RDS Proxy and link it to the secret in AWS Secrets Manager during configuration.

- Update the microservice to retrieve database connection credentials from AWS Systems Manager Parameter Store.
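The following minimal boto3 sketch covers the step of setting up the RDS Proxy and linking it to the secret. `create_db_proxy` and `register_db_proxy_targets` are real RDS client methods. The helper name, the engine family, and every identifier passed in are hypothetical placeholders. `RequireTLS=True` is an example choice rather than a requirement of this setup. Error handling and any waiting for the proxy to become available are omitted.

```python
from typing import Any, Dict, List


def create_proxy(rds_client: Any, name: str, engine_family: str,
                 secret_arn: str, role_arn: str, subnet_ids: List[str],
                 db_instance_id: str) -> Dict[str, Any]:
    """Create an RDS Proxy that logs in with a Secrets Manager secret,
    then register one RDS DB instance as its target.

    `rds_client` is expected to be boto3.client("rds").
    """
    response = rds_client.create_db_proxy(
        DBProxyName=name,
        EngineFamily=engine_family,  # e.g. "MYSQL" or "POSTGRESQL"
        Auth=[{
            "AuthScheme": "SECRETS",
            "SecretArn": secret_arn,  # the secret stored in Secrets Manager
            "IAMAuth": "DISABLED",  # clients use username/password in this setup
        }],
        RoleArn=role_arn,  # ARN of IAM_ROLE_FOR_RDS_PROXY
        VpcSubnetIds=subnet_ids,
        RequireTLS=True,  # example choice: require TLS from clients
    )
    rds_client.register_db_proxy_targets(
        DBProxyName=name,
        TargetGroupName="default",  # the target group every proxy starts with
        DBInstanceIdentifiers=[db_instance_id],
    )
    return response["DBProxy"]  # includes the proxy "Endpoint" for clients
```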

With these configurations in place, microservice applications can connect to the RDS Proxy endpoint without accessing the RDS instance directly.
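On the microservice side, the following minimal boto3 sketch reads connection settings from Parameter Store. `get_parameter` with `Name` and `WithDecryption` is the real SSM API. The parameter layout (`<prefix>/host`, `<prefix>/user`, `<prefix>/password`) and the helper name are hypothetical. In this setup, the `host` value is assumed to hold the RDS Proxy endpoint. How the service reaches the Shared Services account (for example, by assuming a role there) is outside this sketch.

```python
from typing import Any, Dict


def load_db_settings(ssm_client: Any, prefix: str) -> Dict[str, str]:
    """Read a microservice's database settings from Parameter Store.

    `ssm_client` is expected to be boto3.client("ssm"). "host" should hold
    the RDS Proxy endpoint rather than the RDS instance endpoint.
    """
    settings: Dict[str, str] = {}
    for key in ("host", "user", "password"):
        resp = ssm_client.get_parameter(Name=f"{prefix}/{key}", WithDecryption=True)
        settings[key] = resp["Parameter"]["Value"]  # SecureString values are decrypted
    return settings
```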

CONCLUSION

To sum up, RDS Proxy is a robust solution for improving database management in the AWS cloud. It boosts performance by handling connections efficiently and reducing failover times, while keeping credentials secure with AWS Secrets Manager. By putting these practices in place, we ensure that our microservices in EKS can connect to their databases safely without connecting to RDS directly.

By Pavel Luksha, Senior DevOps Engineer.