Introduction

Amazon Web Services (AWS) has long been at the forefront of cloud computing, providing scalable, reliable and cost-effective solutions to businesses around the world. Each year AWS hosts a series of global events such as AWS Summits and re:Invent that bring together developers, engineers and decision makers to learn about the latest cloud innovations, best practices and customer success stories. In May 2025 AWS Summit Poland took place in Katowice, offering an invaluable opportunity for both newcomers and professionals to deepen their knowledge of AWS services, explore hands-on workshops and network with industry peers.

As someone who has never attended such a large-scale tech summit before, I was eager to experience this event for the first time. Over the next few sections I will share my impressions of AWS Summit Poland 2025 – from the keynote sessions and breakout talks to the interactive demo booths and informal meetups. Whether you couldn’t make it this year or are simply curious about what happens behind the scenes, I hope this report will give you a clear, honest look at what made the summit so special.

Arrival, Registration, Agenda and First Impressions

To attend the summit you simply register online and show up on the assigned day and time. Before the event AWS published an agenda listing over 90 sessions of various types and levels, including 52 themed sessions, 19 workshops and 35 customer case studies, all running from 09:00 until 18:00. To make it easier to choose which sessions to attend, AWS provides the AWS Events app. I found it very convenient because I could easily filter sessions by topic, mark the ones I was most interested in and plan my day in advance. The picture below shows part of the agenda.

Upon arrival I picked up my badge, settled in, and prepared for a full day of learning.

The area outside the main hall was lively. People checked their schedules, sipped coffee and looked around. Screens showed the agenda and session schedules while staff handed out orange AWS lanyards and blue Partner badges. Here are a few photos to set the scene and capture the summit’s atmosphere early on.

Opening Breakout – Kubernetes Scaling Made Simple

I began my day at 10:00 AM with the “APP302 | Simplify Kubernetes Workloads with Karpenter & Amazon EKS Auto Mode” session, presented by AWS Senior Solutions Architects Michal Adamkiewicz and Michal Gawlik.

When working on Klika Tech’s Quext project we rely heavily on Amazon EKS, so it is essential to keep up with the latest AWS improvements in this area. Karpenter can simplify EKS cluster management, reduce costs and strengthen infrastructure, so I consider sessions like this one essential to attend. If you’d like to read more about Karpenter and how we adopted it in our infrastructure in 2024, see the AWS EKS Nodes Lifecycle Management with Karpenter article.

During the session, the presenters reviewed how EKS worker nodes scale – beginning with the older Cluster Autoscaler, transitioning to Karpenter and concluding with a pitch on EKS Auto Mode and its benefits for managing a cluster. I found EKS Auto Mode a very promising solution and look forward to applying it to our project’s infrastructure.

AWS Summit Poland 2025 Keynote

The next event was the AWS Summit Poland 2025 Keynote – a special presentation delivered by Andrzej Horawa, AWS Country Manager in Poland, and Slavik Dimitrovich, Director AI/ML and GenAI Specialist Solutions Architecture. The AWS leaders shared how AWS is growing in Poland and how key products are evolving: AI assistants, SageMaker, Bedrock, Graviton CPUs and S3 Tables. They emphasized the key idea that AWS aims to provide all the building blocks people need to bring their ideas to life, showcasing creativity and innovation in many areas, including healthcare, finance, retail, sports and accessibility. AWS builds services that do one job extremely well, allowing customers to combine them flexibly.

According to Slavik Dimitrovich, AWS’s innovation relies on two critical foundations: security and scale.

- Security is embedded at the silicon level with custom-built hardware and virtualization layers that ensure complete customer control over data. Customer data is fully isolated and protected in such a way that not even AWS can access it without explicit authorization.

- Scale is achieved through AWS’s vast global infrastructure, including over 6 million kilometers of fiber optic cables, which connect all regions and availability zones. In the past year alone, AWS expanded its network backbone by over 80%, enhancing throughput and reducing latency worldwide.

He also covered the evolution of AWS compute services, which now offer 850+ EC2 instance types that cater to diverse needs, from standard web applications to high-performance computing (HPC) and in-memory databases of up to 32 TB.

Slavik noted that AWS is a market leader in GPU hosting thanks to a 14-year collaboration with NVIDIA, and that NVIDIA chose AWS for its cloud-based generative AI supercluster.

However, AWS isn’t focused solely on offering hardware built by others. Its Graviton 4 chip is now the most powerful CPU it has built, boasting:

- Up to 45% better performance (especially for Java apps)

- 40% faster for database applications

- 30% faster than Graviton 3

- 60% less energy usage compared to traditional x86 chips

Graviton is now the most commonly deployed chip in AWS’s data centers.

On the AI side, AWS’s Trainium2 chip (Trn2) powers intensive deep learning workloads. Compared to its predecessor, it’s:

- 4× faster

- 3× more energy-efficient

A notable example was Anthropic’s “Project Rainier” – a massive AI training cluster using hundreds of thousands of Trainium2 chips. This cluster aims to push the boundaries of generative AI performance.

Dimitrovich also revisited the evolution of AWS storage services. What began as a way to reduce internal complexity (too many teams building their own storage) has become the backbone of the internet, with over 400 trillion objects stored in Amazon S3. But nowadays it’s not just about storing files: there’s a growing need to analyze, mutate and query tabular data at scale. That’s where AWS’s support for Apache Iceberg comes in, an open-source table format optimized for big data analytics.

To simplify Iceberg management, AWS introduced:

- S3 Tables – A new bucket type that natively supports tabular data operations.

- S3 Metadata – Automatically collects and makes system/custom metadata queryable using SQL.

A fun example was given: taking photos of food and tagging them with restaurant names and impressions, after which all of that data is searchable thanks to metadata. It is a small illustration of how this can feed into AI and analytics pipelines with zero infrastructure overhead.
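To make the food-photo idea a little more concrete, here is a minimal sketch in Python. It uses an in-memory SQLite table purely as a stand-in for a queryable metadata table; it is not the S3 Metadata API, and the table name, columns, object keys and restaurant names are all hypothetical.

```python
import sqlite3

# In-memory SQLite stands in for a queryable metadata table.
# This is an illustration only, not the S3 Metadata schema or API.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE photo_metadata ("
    "object_key TEXT, restaurant TEXT, impression TEXT)"
)

# Hypothetical photos tagged with a restaurant name and an impression.
conn.executemany(
    "INSERT INTO photo_metadata VALUES (?, ?, ?)",
    [
        ("photos/001.jpg", "Bistro A", "great pierogi"),
        ("photos/002.jpg", "Cafe B", "coffee too bitter"),
        ("photos/003.jpg", "Bistro A", "slow service"),
    ],
)


def photos_for(restaurant):
    """Return the object keys of all photos tagged with this restaurant."""
    rows = conn.execute(
        "SELECT object_key FROM photo_metadata "
        "WHERE restaurant = ? ORDER BY object_key",
        (restaurant,),
    )
    return [row[0] for row in rows]


print(photos_for("Bistro A"))
```

The point is only that once tags live in a table, finding every photo from one restaurant becomes a single SQL query rather than a scan over the objects themselves.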

The keynote also included two customer success stories showing how AWS solutions help companies compete and deliver top-quality products. Magdalena Niedzielska, Deputy Director of IT, Shared Services at LOT Polish Airlines, explained how they cut their release cycle from three months to two weeks, and Michal Smolinski, Co-founder and CTO of Radpoint, demonstrated how AWS services form the foundation for SaaS solutions used by radiologists.

Expo Hall

After the keynote I explored the main exhibition space. It was a large hall filled with different company booths showcasing how they use AWS solutions in their products.

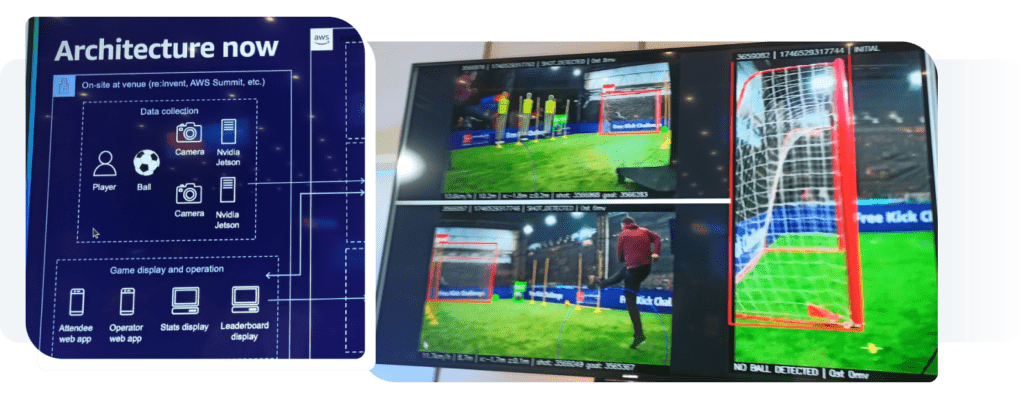

One demo I especially enjoyed involved a small football goal. Anyone could kick a ball toward the goal while two cameras recorded the shot. The video feed was sent to AWS, where the ball’s speed was calculated and participants were ranked by velocity. The diagram below shows which AWS services power this solution.

AWS WAF Deep Dive and Data Transfer Costs Sessions

After a quick dinner break I attended the “I didn’t know AWS WAF did this” session, which covered AWS WAF and its threat-mitigation features for web application security. An AWS security engineer from Docplanner (ZnanyLekarz.pl) showed how to deploy perimeter protection services at scale to protect web apps and enforce compliance with usage policies.

Next, after a 15-minute break, I joined a very informative talk on common network architectures and their data-transfer costs (“Understand and Optimize Data Transfer Costs”). We started with simple designs featuring an ALB and a few subnets using Internet Gateways and NAT Gateways. Then we looked at VPC endpoints and peering before finishing with a complex multi-region setup connected by AWS Transit Gateway, with the focus at every stage on where costs could be saved. At each step the presenter displayed a table of per-gigabyte charges for different traffic paths (intra-AZ, cross-AZ, internet, peering, etc.). The key takeaway was that architecture decisions significantly influence not only reliability but also recurring costs. For example, replacing NAT Gateway egress with PrivateLink can slash monthly fees if an app talks to many AWS-managed public service endpoints.
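The NAT Gateway versus PrivateLink trade-off can be sketched with a few lines of Python. The rates below are placeholder assumptions chosen for illustration, not figures from the talk or a current price list, and the model ignores everything except one hourly charge and one per-gigabyte processing charge per component.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # common approximation for a billing month


@dataclass(frozen=True)
class Pricing:
    hourly_usd: float  # fixed charge per running unit
    per_gb_usd: float  # data processing charge per gigabyte


# Placeholder rates (assumptions): check the pricing pages for your region.
NAT_GATEWAY = Pricing(hourly_usd=0.045, per_gb_usd=0.045)
INTERFACE_ENDPOINT = Pricing(hourly_usd=0.01, per_gb_usd=0.01)


def monthly_cost(pricing, gb_per_month, units=1):
    """Fixed hourly charges for `units` components plus per-GB processing."""
    fixed = units * pricing.hourly_usd * HOURS_PER_MONTH
    return fixed + pricing.per_gb_usd * gb_per_month


# High-volume case: 10 TB per month through one NAT Gateway,
# versus the same traffic through interface endpoints in three AZs.
nat = monthly_cost(NAT_GATEWAY, 10_000)
endpoints = monthly_cost(INTERFACE_ENDPOINT, 10_000, units=3)
print(f"NAT Gateway: {nat:.2f} USD, endpoints: {endpoints:.2f} USD")
```

With these assumed rates the endpoints win clearly at high volume, but the fixed hourly charge per endpoint means that many endpoints carrying little traffic can cost more than a single NAT Gateway, so the comparison is worth running with real traffic figures.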

Deep Karpenter Case Study

Next, I decided to delve deeper into Amazon EKS and Karpenter at the session “Maximizing Efficiency with Karpenter – A Miro Path to Perfection.” The presenters, Martin Drewes, Partner Solutions Architect ISV EMEA at AWS, and Rodrigo Fior Kuntzer, Staff SRE at Miro, began by explaining Kubernetes scaling, both horizontal and vertical, for application pods and for the data plane.

Then we discussed how third-party tools such as KEDA and Kyverno can help with event-based scaling. The key point is that, in some event-driven architectures, it is far more efficient to scale containerized applications on Amazon EKS based on queue message counts rather than CPU or memory load.

For example, as shown in the KEDA manifest below, we can monitor an SQS queue and set a queue-length threshold that triggers pod scaling once it is reached.
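As a rough sketch of what such a configuration expresses, written in Python rather than YAML and not taken from the session slides, the dictionary below mirrors the shape of a KEDA ScaledObject with an `aws-sqs-queue` trigger. The resource names, queue URL, account ID and thresholds are hypothetical, and `desired_replicas` is a simplified model of the scaling rule that ignores HPA tolerances and stabilization windows.

```python
import math

# Shaped like a KEDA ScaledObject with an aws-sqs-queue trigger.
# Every name, the queue URL and all numbers are hypothetical placeholders.
scaled_object = {
    "apiVersion": "keda.sh/v1alpha1",
    "kind": "ScaledObject",
    "metadata": {"name": "orders-worker-scaler"},
    "spec": {
        "scaleTargetRef": {"name": "orders-worker"},
        "minReplicaCount": 1,
        "maxReplicaCount": 20,
        "triggers": [
            {
                "type": "aws-sqs-queue",
                "metadata": {
                    "queueURL": "https://sqs.eu-central-1.amazonaws.com/123456789012/orders",
                    "queueLength": "5",  # target messages per pod
                    "awsRegion": "eu-central-1",
                },
            }
        ],
    },
}


def desired_replicas(messages, spec):
    """Simplified rule: one pod per `queueLength` messages, within bounds."""
    target = int(spec["triggers"][0]["metadata"]["queueLength"])
    wanted = math.ceil(messages / target)
    return max(spec["minReplicaCount"], min(spec["maxReplicaCount"], wanted))


for backlog in (0, 12, 1000):
    print(backlog, "->", desired_replicas(backlog, scaled_object["spec"]))
```

The design choice this illustrates is that the replica count follows the backlog directly: a queue that suddenly fills up requests more pods even while CPU and memory on the existing pods still look idle.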

We also saw a detailed case study of how Miro boosted Kubernetes scalability and efficiency with Karpenter. The speakers revisited the pain points of native EKS autoscaling, then showed how Karpenter’s smarter resource allocation handles mixed workloads and cuts costs.

Well-Architected Framework

Before heading back to the railway station I stopped by the Training & Certification stage. AWS instructors walked through the Well-Architected Framework’s six pillars, then showed how the Well-Architected Tool surfaces high-risk issues and recommends improvement plans. We explored how the AWS Well-Architected Framework delivers clear architectural guidance and how the AWS Well-Architected Tool lets teams measure and refine their entire technology portfolio.

Conclusion

Overall, it was a long, demanding, yet inspiring day. I learned how global carriers shorten release cycles, how startups deliver medical imaging from the cloud and how a few YAML lines let Karpenter provision exactly the capacity pods need, controlling costs while improving efficiency. The AWS community energy is infectious – I left determined to pilot EKS Auto Mode, experiment with KEDA scaling and schedule a review of our data transfer costs.

For a first-time attendee, AWS Summit Poland was more than slides and swag. It was a reminder that technology advances fastest when knowledge is shared in person. Next time I plan to return not only as a listener but, perhaps, as a speaker ready to give back.

By Pavel Luksha, Senior DevOps Engineer, Klika Tech, Inc.